In an era of rapid technological advancement and digitalization, generative AI has evolved beyond a mere buzzword. It is reshaping the way businesses function. By automating content creation and tailoring customer engagement, AI has opened up new opportunities for productivity and innovation. However, these benefits come with a need for caution.

Here are some reasons why controlling the output of generative AI systems is important.

The Power and Risks of Generative AI

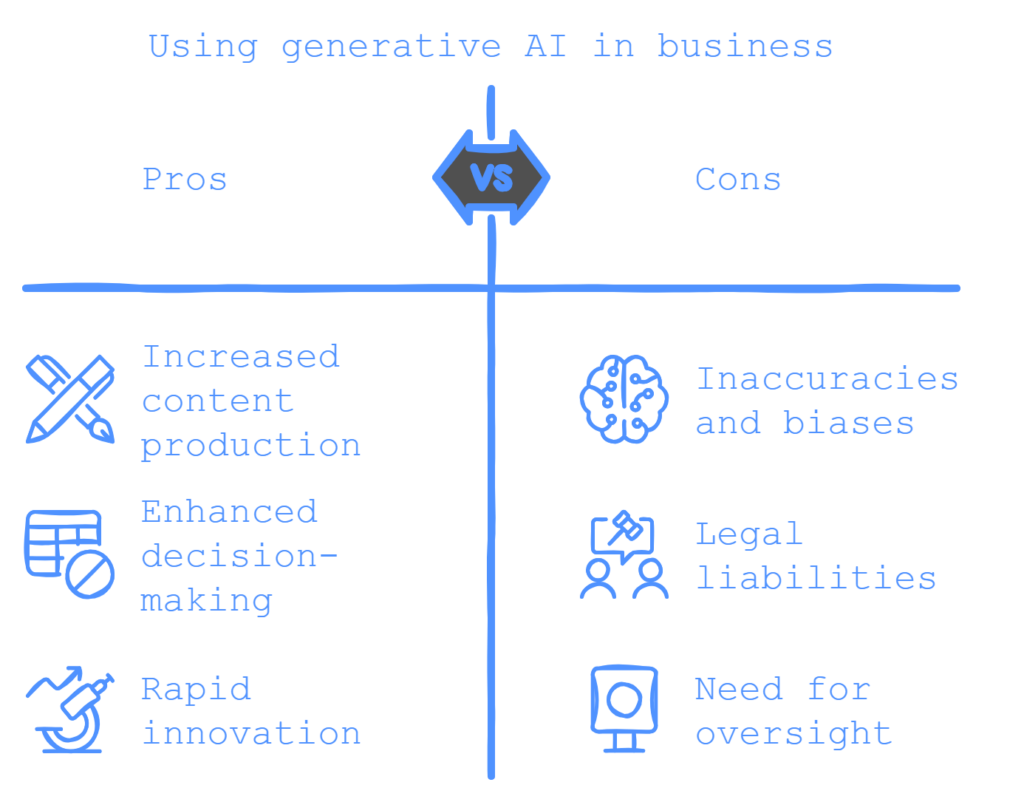

Generative AI refers to systems that produce content or solutions based on their input data. These sophisticated systems can generate many forms of content, including text, videos, and even intricate data patterns. The technology gives companies the capacity to increase content production, support decision-making, and drive innovation rapidly. However, this capability also demands careful management and oversight of the generated output.

Businesses need to manage AI outputs to catch the inaccuracies and biases that can arise because these systems depend heavily on the data used to train them. If that training data is biased or outdated, AI responses may unintentionally reinforce or even worsen those biases.

Intellectual property concerns also arise when AI systems produce content that closely resembles existing works. Without safeguards, companies could unknowingly expose themselves to legal liability by using AI-generated material that is similar to copyrighted works. Businesses should therefore adopt mechanisms that monitor and evaluate the originality of AI-generated content.

Ensuring Accuracy and Brand Consistency

In a competitive marketplace, a single miscommunication or an error in an AI-generated report can tarnish a company’s reputation. It is therefore essential to ensure that AI-generated content is accurate and consistent with your brand.

Take the example of a marketing team using AI to generate product descriptions or customer emails. AI can greatly speed up this kind of communication work, but if it generates content that doesn’t match the company’s brand voice or misrepresents product features, it could confuse customers and damage the brand’s credibility.

Human oversight is essential for verifying AI outputs. Because AI lacks contextual understanding and judgment, businesses should build human review into key stages of the workflow to ensure that AI-generated content is accurate.

Ethical Considerations and Compliance in AI

Beyond brand management, the widespread use of generative AI raises questions about AI ethics, particularly in industries such as finance and healthcare, where the accuracy and integrity of information are paramount.

In finance, an AI-generated report that misinterprets market trends could lead to faulty investment decisions, with potentially enormous costs. In healthcare, where the AI medical diagnostics market is forecast to grow by 23.2% in 2028, AI output should be tightly regulated to avoid mistakes that could have life-altering consequences.

Businesses also face growing regulatory requirements around AI usage as new data privacy laws and regulations are introduced worldwide. They must therefore ensure that their AI applications adhere to these rules to stay compliant. This is particularly important under data protection statutes such as the GDPR, which impose strict rules on how personal data is handled and shared.

To Sum Up

As businesses continue to integrate generative AI into their day-to-day activities, controlling its output becomes vital. That output should be carefully managed rather than left unchecked. This oversight is key to harnessing the full capabilities of AI technology while safeguarding the business’s interests.

To thrive and maintain a positive reputation among consumers and stakeholders alike, businesses must successfully balance innovation with accountability. By implementing controls that verify, refine, and regulate the outputs of generative AI systems, companies can protect their brand, build trust with their customers, and ensure that AI becomes a tool for long-term success.
